Bayesian Interrupted Time Series#

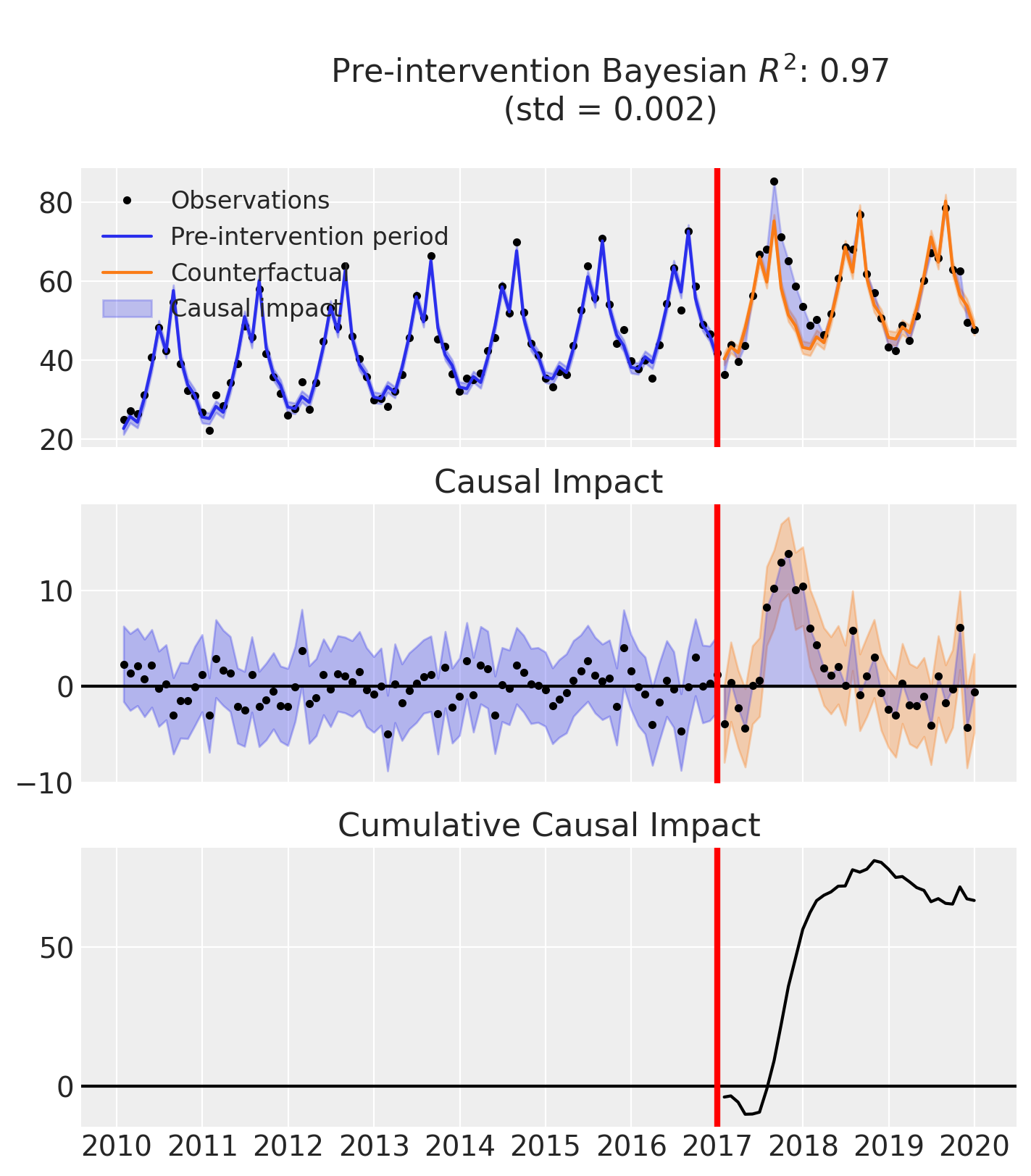

Interrupted Time Series (ITS) analysis is a powerful approach for estimating the causal impact of an intervention or treatment when you have a single time series of observations. The key idea is to compare what actually happened after the intervention to what would have happened in the absence of the intervention (the “counterfactual”). To do this, we train a statistical model on the pre-intervention data (when no treatment has occurred) and then use this model to forecast the expected outcomes into the post-intervention period as-if treatment had not occurred. The difference between the observed outcomes and these model-based counterfactual predictions provides an estimate of the causal effect of the intervention, along with a measure of uncertainty if using a Bayesian approach.

What do we mean by causal impact in Interrupted Time Series?#

In the context of interrupted time series analysis, the term causal impact refers to the estimated effect of an intervention or event on an outcome of interest. We can break this down into two components which tell us different aspects of the intervention’s effect:

The Instantaneous Bayesian Causal Effect at each time point is the difference between the observed outcome and the model’s posterior predictive distribution for the counterfactual (i.e., what would have happened without the intervention). This is not just a single number, but a full probability distribution that reflects our uncertainty.

The Cumulative Bayesian Causal Impact is the sum of these instantaneous effects over the post-intervention period, again represented as a distribution.

Let \(y_t\) be the observed outcome at time \(t\) (after the intervention), and \(\tilde{y}_t\) be the model’s counterfactual prediction for the same time point. Then:

Instantaneous effect: \(\Delta_t = y_t - \tilde{y}_t\)

Cumulative effect (up to time \(T\)): \(C_T = \sum_{t=1}^T \Delta_t\)

In Bayesian analysis, both \(\tilde{y}_t\) and \(\Delta_t\) are distributions, not just point estimates.

Why does this matter for decision making?#

These metrics allow you to answer questions like:

“How much did the intervention change the outcome, compared to what would have happened otherwise?”

“What is the total effect of the intervention over time, and how certain are we about it?”

By providing both instantaneous and cumulative effects, along with their uncertainty, you can make more informed decisions and better understand the impact of your interventions.

Interrupted Time Series example#

import arviz as az

import pandas as pd

import causalpy as cp

%load_ext autoreload

%autoreload 2

%config InlineBackend.figure_format = 'retina'

seed = 42

Load data

df = (

cp.load_data("its")

.assign(date=lambda x: pd.to_datetime(x["date"]))

.set_index("date")

)

treatment_time = pd.to_datetime("2017-01-01")

df.head()

| month | year | t | y | |

|---|---|---|---|---|

| date | ||||

| 2010-01-31 | 1 | 2010 | 0 | 25.058186 |

| 2010-02-28 | 2 | 2010 | 1 | 27.189812 |

| 2010-03-31 | 3 | 2010 | 2 | 26.487551 |

| 2010-04-30 | 4 | 2010 | 3 | 31.241716 |

| 2010-05-31 | 5 | 2010 | 4 | 40.753973 |

Run the analysis

Note

The random_seed keyword argument for the PyMC sampler is not necessary. We use it here so that the results are reproducible.

result = cp.InterruptedTimeSeries(

df,

treatment_time,

formula="y ~ 1 + t + C(month)",

model=cp.pymc_models.LinearRegression(sample_kwargs={"random_seed": seed}),

)

Show code cell output

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [beta, sigma]

Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 2 seconds.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

Sampling: [beta, sigma, y_hat]

Sampling: [y_hat]

Sampling: [y_hat]

Sampling: [y_hat]

fig, ax = result.plot()

result.summary()

==================================Pre-Post Fit==================================

Formula: y ~ 1 + t + C(month)

Model coefficients:

Intercept 23, 94% HDI [21, 24]

C(month)[T.2] 2.9, 94% HDI [0.88, 4.8]

C(month)[T.3] 1.2, 94% HDI [-0.82, 3.1]

C(month)[T.4] 7.2, 94% HDI [5.2, 9.1]

C(month)[T.5] 15, 94% HDI [13, 17]

C(month)[T.6] 25, 94% HDI [23, 27]

C(month)[T.7] 18, 94% HDI [16, 20]

C(month)[T.8] 33, 94% HDI [32, 35]

C(month)[T.9] 16, 94% HDI [14, 18]

C(month)[T.10] 9.2, 94% HDI [7.3, 11]

C(month)[T.11] 6.3, 94% HDI [4.4, 8.2]

C(month)[T.12] 0.61, 94% HDI [-1.3, 2.5]

t 0.21, 94% HDI [0.19, 0.23]

sigma 2, 94% HDI [1.7, 2.3]

As well as the model coefficients, we might be interested in the estimated causal impact of the intervention over time - what we called the instantaneous Bayesian causal effect above. The post intervention causal impact estimates are contained in the post_impact attribute, which is of type xarray.DataArray. We can take a look at what this looks like, and we can see that it consists of 3 dimensions: chain, draw, and time (obs_ind). The chain and draw dimensions are used to store the samples from the posterior distribution, while the obs_ind dimension corresponds to the time points in the time series.

We have already seen a visual representation of this - the middle plot (see above) generated when calling the plot method. But often we would like to reduce this down to a simpler estimate. We can do this by various aggregation methods.

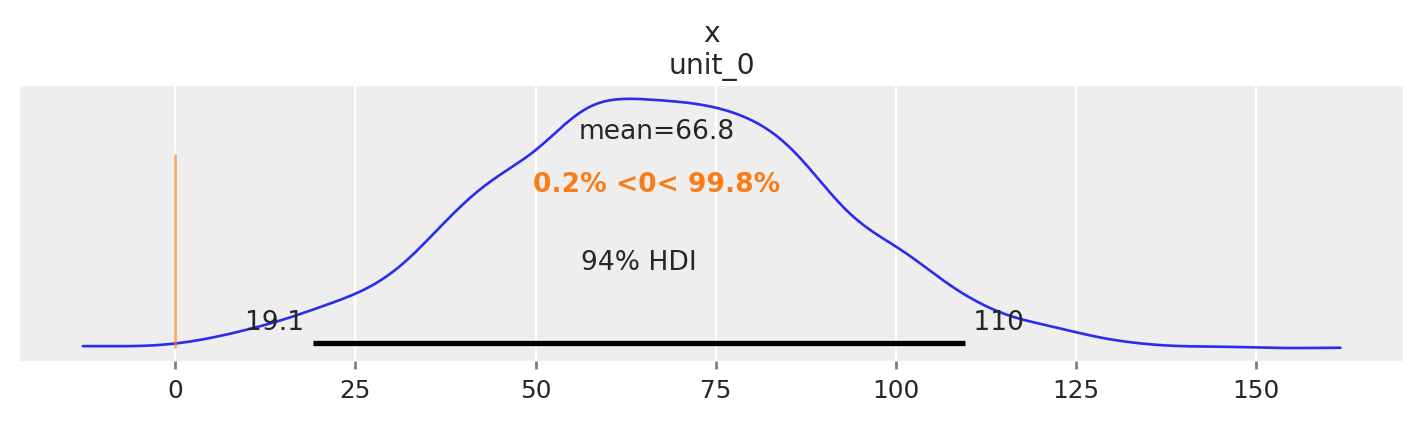

If we wanted to evaluate the total causal impact (or what we called the cumulative Bayesian causal effect) of the intervention over the whole post-intervention period, we can use the sum method. This will sum the post-intervention impact estimates across all time points in the post-intervention period.

az.plot_posterior(

result.impact.sel(period="post").sum("obs_ind"),

ref_val=0,

hdi_prob=0.94,

round_to=3,

figsize=(7, 2),

);

So we could say that the most credible total causal impact of the intervention is around 66, with a 94% credible interval of around 20 to 110.

And if we want precise numerical and summary statistics of that, we can use the arviz.summary function:

az.summary(result.impact.sel(period="post").sum("obs_ind"), kind="stats")

| mean | sd | hdi_3% | hdi_97% | |

|---|---|---|---|---|

| x[unit_0] | 66.751 | 23.915 | 19.07 | 109.525 |

Of course, if we wanted to query the estimated impact over a specific time period, we can leverage the fact that this is an xarray.DataArray and we can use the sel method to select the time points we are interested in. We will leave this as an exercise for the reader.

Moving on, it would also be possible to look at the mean post-intervention impact estimates, which would give us the average impact of the intervention over the post-intervention period. This can be done using the mean method and would equate to \(\bar{C_T} = \Big[ \sum_{t=1}^T \Delta_t \Big] / T\)

az.summary(result.impact.sel(period="post").mean("obs_ind"), kind="stats")

| mean | sd | hdi_3% | hdi_97% | |

|---|---|---|---|---|

| x[unit_0] | 1.854 | 0.664 | 0.53 | 3.042 |

Warning

Care must be taken with the mean causal impact statistic \(\bar{C_T}\). It only makes sense to use this statistic if it looks like the intervention had a lasting (and roughly constant) effect on the outcome variable. If the effect is transient (like in the example here), then clearly there will be a lot of post-intervention period where the impact of the intervention has ‘worn off’. If so, then it will be hard to interpret the mean impacts real meaning.

But if there was a roughly constant impact of the intervention over the post-intervention period, then the mean causal impact can be interpreted as the mean impact of the intervention (per time point) over the post-intervention period.

Similarly, if we wanted, we would also be able to query the estimated cumulative impact of the intervention over the post-intervention period by instead looking at the post_impact_cumulative attribute, rather than the post_impact attribute.